Errors of omission in Home Office statistical releases

There is still room for improvement in the statistical sophistication of Home Office press releases, but very few are as bad as the notorious “knife crime” release issued on 11 December 2008.

That is the conclusion of a review by the Home Office's own Surveys, Design and Statistics Subcommittee, which set the bar high in rating 203 press releases issued in the months February to September 2008 and the same period in 2009.

The aim was to see whether the censure issued in January 2009 by the UK Statistics Authority after the knife crime row had improved reporting standards, by comparing equivalent periods before and after the censure. The study was powered to detect a one-third reduction in the number of supporting details omitted from press releases.

A list of 29 omissions (lacunae) was prepared, against which the press releases were judged. The possible lacunae included issues such as publishing current numbers, but no previous numbers to compare them against; claiming a percentage change but including no actual numbers; claiming success in a policy without numbers to back the claim; missing methodology; claiming a trend without presenting evidence; and failing to define terms sufficiently.

Assessors were randomised into pairs, and each pair was assigned to four of the eight months in each period. The members of each pair were consistent in their recording of the number of lacunae.

The conclusion was that 40 per cent of press releases (82 out of 203) had no statistical omissions, while the other 60 per cent did. This did not mean, the report says, that the statistics in the releases were wrong, but that their presentation fell short of best practice. For the 60 per cent, the average number of lacunae was 2.7, and only 13 had six or more.
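The arithmetic behind these figures can be checked in a few lines. This is only a back-of-envelope sketch using the rounded numbers quoted in this article; the variable names are illustrative and the implied totals are approximations, not figures taken from the report.

```python
# Figures quoted in the article (rounded as published).
total_releases = 203
no_omissions = 82
mean_among_flawed = 2.7  # average lacunae among releases with at least one

# Releases with at least one omission.
with_omissions = total_releases - no_omissions

# Share of releases with no omissions, as a percentage.
share_clean_pct = 100 * no_omissions / total_releases

# Implied overall mean across all 203 releases (approximate,
# because 2.7 is itself a rounded average).
implied_total_lacunae = with_omissions * mean_among_flawed
implied_overall_mean = implied_total_lacunae / total_releases

print(f"Releases with omissions: {with_omissions}")
print(f"Share with no omissions: {share_clean_pct:.1f}%")
print(f"Implied mean lacunae per release: {implied_overall_mean:.2f}")
```

The implied overall mean comes out at about 1.6 lacunae per release, which sits between the per-year means of 1.7 and 1.5 reported for 2008 and 2009.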

None of the 203 had as many as the knife crime press release and accompanying fact sheet, which were scored as having 34 lacunae. So the study did show that the knife crime release was exceptional.

However, it failed to show any change between 2008 and 2009. Mean counts were 1.7 lacunae per press release for 78 releases in 2008 (standard deviation 3.5) and 1.5 per press release (sd 1.8) for 125 in 2009.
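To see why a difference of 0.2 lacunae per release is not evidence of change, the summary figures above can be put through a simple large-sample comparison of two means. This is a sketch under stated assumptions, not the subcommittee's own analysis: the function name is hypothetical, a normal approximation is assumed, and counts of lacunae are skewed, so the report may well have used a different method.

```python
import math


def compare_means_from_summary(mean1, sd1, n1, mean2, sd2, n2, z_crit=1.96):
    """Approximate comparison of two independent means from summary statistics.

    Uses the unpooled (Welch-style) standard error and a normal
    approximation for the 95% interval. Hypothetical helper for illustration.
    """
    diff = mean1 - mean2
    # Standard error of the difference, without assuming equal variances.
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    z = diff / se
    ci_low = diff - z_crit * se
    ci_high = diff + z_crit * se
    return diff, se, z, ci_low, ci_high


# Figures quoted in the article: 2008 first, then 2009.
diff, se, z, ci_low, ci_high = compare_means_from_summary(
    mean1=1.7, sd1=3.5, n1=78,
    mean2=1.5, sd2=1.8, n2=125,
)

print(f"Difference in means (2008 minus 2009): {diff:.2f}")
print(f"Standard error: {se:.2f}")
print(f"Approximate 95% interval: {ci_low:.2f} to {ci_high:.2f}")
```

On these figures the approximate interval runs from about -0.6 to +1.0 lacunae per release, comfortably spanning zero, which is consistent with the finding of no detectable change.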

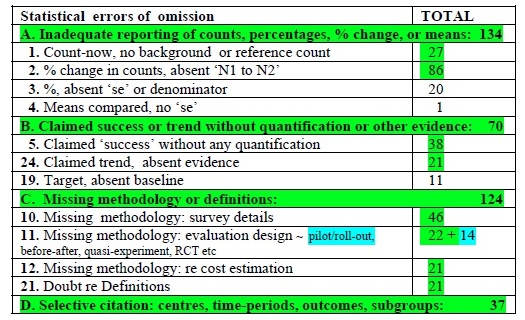

The committee prepared a simplified list of statistical errors of omission grouped into four categories: A, B, C and D. The table below lists them and the number of press releases that failed the test in each case. The first three categories (A, B and C) include the top eight errors identified, so getting them right should be more than enough to achieve a one-third reduction in omissions. Category D is included because selective citation so undermines trust.

Should the Home Office statisticians and press officers feel at all sore at these conclusions, they can console themselves by reading a report in the 27 March BMJ by Sally Hopewell of the Centre for Statistics in Medicine at Oxford and colleagues on the quality of reporting of randomised controlled trials in medical journals in 2000 and 2006 (BMJ 340, p697, 27 March).

They found that, despite the existence of guidelines on reporting results, many published papers fall woefully short. Only 53 per cent in 2006 (324 out of 616) reported the primary outcome; 45 per cent the sample size calculation; 34 per cent the method of random sequence generation; 25 per cent allocation concealment; and 25 per cent blinding details.

While this was an improvement on the 2000 scores, “the quality of reporting remains well below an acceptable level”, the team concludes.

The study is itself open to at least one criticism, however, since the assessments of the 2000 and 2006 papers were made by different teams of reviewers. What, no randomisation? Tut tut.

Competing interests: Sheila Bird, a director of Straight Statistics, chaired the sub-committee responsible for the report on Home Office press releases.